Clarisse submitter reference¶

Warning

Due to Isotropix's discontinuation of Clarisse, Conductor will be deprecating Clarisse rendering on March 29, 2024. Please reach out to Conductor Support for any questions or concerns.

The Conductor submitter for Clarisse allows you to ship renders to Conductor from a familiar interface within Clarisse. It's implemented as a custom class that lives inside the project. The class name is ConductorJob.

You may configure several ConductorJobs in a single project. A single job may also be set up to render many images.

Any properties you set on a ConductorJob will be stored inside the project when you save.

This document is a reference for all the attributes and functionality of the plugin. If you want to get up and running fast, head over to the Clarisse submitter tutorial page.

Install and register the plugin¶

If you haven't already done so, download the Companion App.

Note

The Clarisse submitter is no longer available through Conductor Companion. Clarisse rendering for current customers will continue to be supported until March 29, 2024.

To install from the Companion App¶

Open the plugins page in the Companion and install or upgrade the desired plugin. The default installation directory is $HOME/Conductor. Once the installation is complete, you'll see a panel with instructions to register the plugin.

To install directly from PyPI¶

All Conductor Client tools are Python packages. If you are in a studio environment and want to automate the installation, or if the Companion failed to install, you can install directly from PyPI (the Python Package Index) using pip on the command line.

The instructions below install the Clarisse submitter into a folder called Conductor in your home directory.

# Use a variable to hold the install location

INSTALL_DIR=$HOME/Conductor

# Make the directory, in case it doesn't exist

mkdir -p $INSTALL_DIR

# Install the plugin

pip install --upgrade cioclarisse --target=$INSTALL_DIR

# Run a post-install script that lets Clarisse know about the plugin's location.

python $INSTALL_DIR/cioclarisse/post_install.py

On Windows, use the equivalent commands:

SET INSTALL_DIR=%userprofile%/Conductor

mkdir %INSTALL_DIR%

pip install --upgrade cioclarisse --target=%INSTALL_DIR%

python %INSTALL_DIR%/cioclarisse/post_install.py

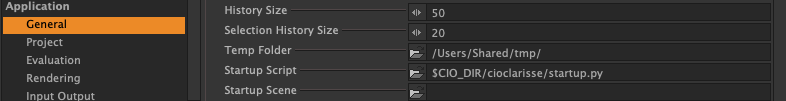

To register the submitter in Clarisse¶

Set the path to the provided startup script in the Startup Script section of the preferences panel. The change takes effect the next time you start Clarisse.

$CIO_DIR/cioclarisse/startup.py

Note

CIO_DIR is a variable in your clarisse.env file that points to the Conductor plugins location.

Attributes¶

title¶

The title that appears in the Conductor dashboard. You may find it useful to use Clarisse's PNAME variable in an expression to keep it in sync with the project name. For example:

Clarisse - $PNAME

images¶

Images or layers to be rendered. Images must have the Render to Disk attribute set and the Save As field must contain a filename.

conductor_project_name¶

The Conductor project. The dropdown menu is populated or updated when the submitter connects to your Conductor account. If the menu contains only the - Not Connected - option, then press the Connect button.

clarisse_version¶

Choose the version of Clarisse to run on the render nodes.

use_custom_frames¶

Activate a text field to enter a custom frame list. When set, the frame ranges on connected image items are ignored and the custom frames are used for all of them. If you leave use_custom_frames off, each image may specify its own range, and the ranges may differ from each other. By default, the render command generated for each task renders all images together and calculates the correct frames to render for each image within the chunk.

custom_frames¶

The set of frames to render when use_custom_frames is on. To specify the set of frames, enter a comma-separated list of arithmetic progressions. In most cases, this will be a simple range.

1001-1200

However, any set of frames may be specified efficiently in this way, including negative frames.

-20--15,1,7,10-20,30-60x3,1001
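As an illustration of the syntax above, here is a minimal sketch of how such a frame specification could be expanded into individual frames. The function name parse_frames and the regular expression are assumptions made for this example; they are not taken from the submitter's code.

```python
import re

# One term of the spec: "7", "10-20", "30-60x3", or a negative range "-20--15".
# Hypothetical pattern written for this sketch.
_TERM = re.compile(r"^(-?\d+)(?:-(-?\d+)(?:x(\d+))?)?$")


def parse_frames(spec):
    """Expand a comma-separated frame spec into a sorted list of unique frames."""
    frames = set()
    for term in spec.split(","):
        match = _TERM.match(term.strip())
        if not match:
            raise ValueError("Invalid frame term: %r" % term)
        start = int(match.group(1))
        # A single number is treated as a range of one frame.
        end = int(match.group(2)) if match.group(2) is not None else start
        # The optional "xN" suffix is the step of the progression.
        step = int(match.group(3)) if match.group(3) is not None else 1
        if end < start or step < 1:
            raise ValueError("Invalid frame term: %r" % term)
        frames.update(range(start, end + 1, step))
    return sorted(frames)


print(parse_frames("30-60x3"))
# [30, 33, 36, 39, 42, 45, 48, 51, 54, 57, 60]
```

Each term is a start frame, an optional end frame, and an optional step, which is why a single comma-separated string can describe any set of frames compactly.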

chunk_size¶

A chunk is the set of frames handled by one task. If your renders are fairly fast, it may make sense to render many frames per task, because the time it takes to spin up instances and sync can be significant by comparison.
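To make the relationship between frames, chunks, and tasks concrete, the sketch below splits a frame list into chunks of a given size. The helper chunk_frames is hypothetical and only illustrates the idea; the submitter's own chunking logic may differ.

```python
def chunk_frames(frames, chunk_size):
    """Split a list of frames into consecutive chunks, one chunk per task."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be at least 1")
    return [frames[i:i + chunk_size] for i in range(0, len(frames), chunk_size)]


frames = list(range(1, 11))  # the sequence 1-10
chunks = chunk_frames(frames, 2)
print(len(chunks))   # 5
print(chunks[-1])    # [9, 10]
```

With a sequence of 1-10 and a chunk size of 2, this yields five tasks, the last of which covers frames 9 to 10.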

use_scout_frames¶

Activates a set of frames to be rendered first. This allows you to check a subsample of frames before committing to the full render.

scout_frames¶

Scout frames to render. When the submission reaches Conductor, only those tasks containing the specified scout frames are started. Other tasks are set to a holding state.

Note

If chunk_size is greater than one, you will likely find extra frames rendered that were not listed as scout frames. As the smallest unit of execution is a task, there is no way to specify that part of a task should be started and another part held. Therefore all frames are rendered in any task that contains any scout frame.
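The effect described in the note can be sketched as follows. The function split_scout_tasks is a hypothetical helper written for this example, assuming tasks are represented as plain lists of frames.

```python
def split_scout_tasks(chunks, scout_frames):
    """Return (started, held): tasks that contain a scout frame start, the rest are held."""
    scout = set(scout_frames)
    started = [chunk for chunk in chunks if scout.intersection(chunk)]
    held = [chunk for chunk in chunks if not scout.intersection(chunk)]
    return started, held


# Sequence 1-10 with a chunk size of 2, and scout frames 3 and 8.
chunks = [[1, 2], [3, 4], [5, 6], [7, 8], [9, 10]]
started, held = split_scout_tasks(chunks, [3, 8])
print(started)  # [[3, 4], [7, 8]]
print(held)     # [[1, 2], [5, 6], [9, 10]]
```

Frames 4 and 7 are rendered even though they were not listed as scout frames, because they share a task with frames 3 and 8.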

tiles¶

Render tiles. Split each frame across many machines.

instance_type¶

Specify the hardware configuration used to run your tasks. Higher specification instances are potentially faster and able to handle heavier scenes. You are encouraged to run tests to find the most cost-efficient combination that meets your deadline.

preemptible¶

Preemptible instances are less expensive to run than non-preemptible. The drawback is that they may be stopped at any time by the cloud provider. The probability of an instance being preempted rises with the duration of the task. Conductor does not support checkpointing, so if a task is preempted it is started from scratch on another instance. It is possible to change the preemptible setting in the dashboard for your account.

retries¶

A task may fail if the command returns a non-zero exit code or if the instance is preempted by the cloud provider. Set a value above 0 to have the task retried automatically in either of these situations.

dependency_scan_policy¶

Specify how to find files that the project depends on. Your project is likely to contain references to external textures and geometry caches. These files all need to be uploaded. The dependency-scan searches for these files at the time you generate a preview or submit a job. There are 3 options.

- No Scan. No scan will be performed. This may be useful if the scanning process is slow for your project. You can instead choose to cache the list of dependencies. See manage_extra_uploads. If you choose this method, you should be aware when new textures or other dependencies are added to your project, and add them to the upload list manually.

- Smart Sequence. When set, an attempt is made to identify for upload only those files needed by the frames being rendered. For example, suppose you have a sequence of 1000 background images on disk. Your shot is 20 frames long and you've set the sequence attributes on the texture map to start 100 frames in (a -100 frame offset). The smart sequence option will find frames from 0101 to 0120. Filenames are searched for two patterns that indicate a time-varying component: ### and $4F.

  - If any number of hashes are found in a filename, then the list of files to upload is calculated based on the frames set in the ConductorJob, and the sequence attributes associated with the filename.

  - Likewise, if $F variables are found, the list of files will reflect the frames as specified in the ConductorJob. However, expressions such as $F * 2 are not resolved.

- Glob. Find all files that exist on disk that could match either of the two time-varying patterns. If, for example, your shot is 50 frames but you have 100 images on disk, then a glob scan will find and upload all those images even though half of them are not used.
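The difference between the Smart Sequence and Glob policies can be illustrated with a rough sketch. The helpers expand_sequence and to_glob, the regular expressions, and the file paths are all assumptions made for this example. The sketch also assumes the caller has already applied any sequence offset to the frame list, and it is not the submitter's actual scanning code.

```python
import re

_HASHES = re.compile(r"#+")          # e.g. "####"
_F_VAR = re.compile(r"\$(\d*)F\b")   # e.g. "$F" or "$4F"


def expand_sequence(path, frames):
    """Smart Sequence idea: resolve the pattern only for the given frames."""
    def resolve(frame):
        # Pad to the number of hashes found in the filename.
        resolved = _HASHES.sub(lambda m: str(frame).zfill(len(m.group(0))), path)
        # Pad to the digit count in "$4F"; a plain "$F" is left unpadded.
        return _F_VAR.sub(lambda m: str(frame).zfill(int(m.group(1) or 1)), resolved)
    return [resolve(frame) for frame in frames]


def to_glob(path):
    """Glob idea: match every file on disk that could fit the pattern."""
    return _F_VAR.sub("*", _HASHES.sub("*", path))


files = expand_sequence("/textures/bg.####.exr", range(101, 121))
print(files[0], files[-1])
# /textures/bg.0101.exr /textures/bg.0120.exr
print(to_glob("/textures/bg.$4F.exr"))
# /textures/bg.*.exr
```

The first function produces exactly the 20 files the shot needs, while the glob pattern would match every image in the directory that fits the naming scheme.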

local_upload¶

Upload files from within the Clarisse session, as opposed to using an upload daemon. If you have a large number of assets to upload, it will block Clarisse until it finishes uploading.

A better solution may be to turn on Use Upload Daemon. An upload daemon is a separate background process. It means assets are not uploaded in the application. The submission, including the list of expected assets, is sent to Conductor, and the upload daemon continually asks the server if there are assets to upload. When your job hits the server, the upload daemon will get the list and upload them, which allows you to continue with your work.

You can start the upload daemon either before or after you submit the job. Once started, it will listen to your entire account, and you can submit as many jobs as you like.

Note

You must have Conductor Core installed in order to use the upload daemon and other command line tools. See the installation page for options.

To run an upload daemon, open a terminal or command prompt, and run the following command.

conductor uploader

Once started, the upload daemon runs continuously and uploads files for all jobs submitted to your account.

upload_only¶

Submit a job with no tasks. This can be useful in a pipeline for artists who create assets but do not submit shots. If a texture artist submits an upload-only job, then by the time a lighting artist needs to test render, all the assets have been uploaded.

manage_extra_uploads¶

Opens a panel to browse or scan for files to upload. If any files are not found by the dependency scan at submission time, they may be added here. They will be saved on the extra_uploads attribute.

extra_uploads¶

Files to be uploaded in addition to any files found by dependency scanning.

manage_extra_environment¶

By default, your job's environment variables are defined automatically based on the software and plugin versions you choose. Sometimes, however, it can be necessary to append to those variables or add more of your own.

For example, you may have a script you want to upload and run without entering its full path. In that case, you can add its location to the PATH variable.

Add an entry with the Add button, then enter PATH as the Name of the variable and /my/scripts/directory as the Value. Make sure Exclusive is switched off to indicate that the value should be appended.

You can also enter local environment variables in the value field itself. They will then be active in the submission. You might use $MY_SCRIPTS_PATH (if it's defined) for the value in the above example.
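The append and exclusive behaviors can be sketched as below. The function apply_entries, the tuple layout, and the ":" separator are assumptions for illustration only; the way Conductor actually merges variables on the render node may differ.

```python
import os


def apply_entries(base_env, entries):
    """Merge (name, value, exclusive) entries into a copy of base_env."""
    env = dict(base_env)
    for name, value, exclusive in entries:
        # Expand local variables such as $MY_SCRIPTS_PATH if they are defined.
        value = os.path.expandvars(value)
        if exclusive or not env.get(name):
            # Exclusive entries replace any existing value.
            env[name] = value
        else:
            # Non-exclusive entries are appended (":" separator is assumed).
            env[name] = env[name] + ":" + value
    return env


base = {"PATH": "/usr/bin"}
print(apply_entries(base, [("PATH", "/my/scripts/directory", False)])["PATH"])
# /usr/bin:/my/scripts/directory
print(apply_entries(base, [("PATH", "/my/scripts/directory", True)])["PATH"])
# /my/scripts/directory
```

Leaving Exclusive off keeps the automatically defined value and adds yours to it, which is usually what you want for PATH.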

task_template¶

A template for the commands to run on remote instances. The template may use tokens enclosed in angle brackets. They are intended for use only in ConductorJob items. If you examine the task template and then click the Preflight button, you'll see how the tokens are resolved. See the table at the end of this document for a full list of available tokens, and the scopes in which they are valid.
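As a rough illustration of how angle-bracket tokens are resolved, the sketch below substitutes token values into a template string. The function resolve_template and the command my_render_command are hypothetical; only the token names and example values come from the token table at the end of this document.

```python
import re

_TOKEN = re.compile(r"<(\w+)>")


def resolve_template(template, tokens):
    """Replace each <token> in the template with its value."""
    def replace(match):
        name = match.group(1)
        if name not in tokens:
            raise KeyError("Unknown token: <%s>" % name)
        return str(tokens[name])
    return _TOKEN.sub(replace, template)


# Hypothetical template; the real default template is shown in the submitter.
template = "my_render_command <render_package> -frames <chunkstart>-<chunkend>"
tokens = {
    "render_package": '"/path/to/project/shot.render"',
    "chunkstart": 9,
    "chunkend": 10,
}
print(resolve_template(template, tokens))
# my_render_command "/path/to/project/shot.render" -frames 9-10
```

Clicking the Preflight button remains the reliable way to see how the submitter itself resolves a template.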

notify¶

Add one or more email addresses, separated by commas, to receive an email when the job completes.

show_tracebacks¶

Show a full stack-trace for software errors in the submitter.

conductor_log_level¶

Set the log level for Conductor's library logging.

Tokens¶

Tokens exist at four different scopes:

- Global. The same value for all Conductor job items. Example: tmp_dir.

- Job. A different value for each job. Example: sequence.

- Task. A different value for each generated task. Example: chunks.

- Tile. A different value for each tile. Example: tile.

Below is the full list of Conductor tokens.

| Token name | Example value | Scope |
|---|---|---|
| sequencelength | 10 | Job |
| sequence | 1-10 | Job |
| sequencemin | 1 | Job |
| sequencemax | 10 | Job |
| cores | 2 | Job |
| flavor | standard | Job |
| instance | 2 core, 7.50GB Mem | Job |
| preemptible | preemptible | Job |
| retries | 3 | Job |
| job | conductor_job_item_name | Job |
| sources | project://scene/image1 project://scene/image2 | Job |
| scout | 3-8x5 | Job |
| chunksize | 2 | Job |
| chunkcount | 5 | Job |
| scoutcount | 2 | Job |
| render_package | "/path/to/project/shot.render" | Global |
| project | harry_potter | Job |
| chunks | 9:10 9:10 | Task |
| chunklength | 2 | Task |
| chunkstart | 9 | Task |
| chunkend | 10 | Task |
| directories | "/path/to/renders/layerA" "/path/to/renders/layerB" | Job |
| pdir | /path/to/project | Global |
| temp_dir | "/path/to/temp/directory" | Global |
| tiles | 9 | Job |
| tile_number | 1 | Tile |
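One way to picture the four scopes is as layers, where a narrower scope can also see the tokens of the broader scopes around it. The sketch below models that with collections.ChainMap from the Python standard library. The layering itself is an assumption made for illustration, and the helper tokens_for is hypothetical; the values are examples from the table above.

```python
from collections import ChainMap

global_tokens = {"pdir": "/path/to/project"}
job_tokens = {"sequence": "1-10", "chunksize": 2}
task_tokens = {"chunkstart": 9, "chunkend": 10}
tile_tokens = {"tile_number": 1}


def tokens_for(*scopes):
    """Combine scopes given from broadest to narrowest; narrower scopes are searched first."""
    return ChainMap(*reversed(scopes))


# Tokens visible while resolving a command for one task.
task_view = tokens_for(global_tokens, job_tokens, task_tokens)
print(task_view["pdir"], task_view["sequence"], task_view["chunkstart"])
# /path/to/project 1-10 9
print("tile_number" in task_view)
# False
```

Under this model, a token such as tile_number is only available once a tile scope has been added to the lookup.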